
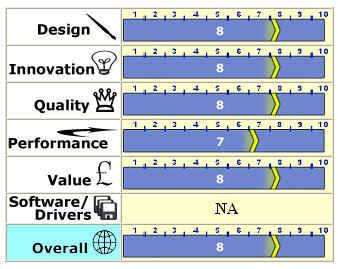

Test Results: Pixelview GeForce FX 5900 XT Limited Edition

Introduction:::... If there's one great thing about the introduction of new high-end graphics cards with price tags that look like telephone numbers, it's that the products that came before them must now cower in humiliation and lower their prices to reflect the new order and their diminished status. That sounds grim, but in truth these "old" cards are still plenty fast enough for the vast majority of gamers, and not just for yesterday's games either. Sure, they may struggle with some of the big-name games written to push your hardware beyond its limits, but games like those aren't released every week. Most games will run just fine, even if it means easing up on some of the graphical glory and dying amongst slightly less convincing shadows, or getting blood all over bump-mapped walls rather than displacement-mapped ones.

Take a look around 3DVelocity and you'll notice that I haven't personally reviewed many NV3x-based graphics cards. This wasn't because we couldn't source any; it was because I had decided that there were just too many architectural idiosyncrasies for me to give any card based on this GPU my wholehearted recommendation. Well, today I'm breaking my silence, not because I've suddenly taken a liking to NV3x, but because my eye was caught by what the guys at Prolink had done with it. Their GeForce FX 5900 XT Golden Limited looks unique, is well priced and, because it's part of NVIDIA's plan to clear out the last of its previous-generation chip stock, won't be available in huge numbers. Let's do the specs:

The 5900XT is a slightly strange beast. With four pipelines, each featuring dual TMUs, and a price that put it squarely up against NVIDIA's already reasonably successful but undoubtedly slower 5700, it became the big value-for-money choice for those prepared to sacrifice a little pixel-pushing power in exchange for a substantial cash saving. While Prolink are a large, well-respected multimedia company, they don't seem to have as high a profile as some of their competitors when it comes to graphics cards. This must, I assume, be down to a lower-visibility marketing strategy, as their products have always struck me as innovative and of good quality. Anyway, let's get to the card itself and see what Prolink have done to get their product noticed in a market where every company seems to have a slice. This way please:
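To get a rough feel for what the 5900XT's four pipelines with dual TMUs apiece mean, here's a back-of-envelope sketch in Python. It assumes the simple textbook model where each pipeline writes one pixel per clock and each TMU applies one texture sample per clock, so it gives theoretical peaks only; real games never get there. The 484MHz figure is simply the stable core overclock this card reached later in testing, used here as an example input. The function name is my own invention.

```python
def theoretical_fillrates(core_mhz, pipelines=4, tmus_per_pipe=2):
    """Return (pixel, texel) peak fillrates in Mpixels/s and Mtexels/s.

    Back-of-envelope model (an assumption, not a measurement): each
    pipeline writes one pixel per clock and each TMU applies one texture
    sample per clock. These are ceilings, not real-world throughput.
    """
    pixel_rate = pipelines * core_mhz
    texel_rate = pipelines * tmus_per_pipe * core_mhz
    return pixel_rate, texel_rate


# Example input: the 484MHz stable core overclock reached in testing.
pixel, texel = theoretical_fillrates(484)
print(f"{pixel} Mpixels/s, {texel} Mtexels/s")
```

In this model, doubling the TMUs per pipeline doubles the texel rate but leaves the pixel rate unchanged. The second TMU in each pipe only pays off when a pixel uses more than one texture.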

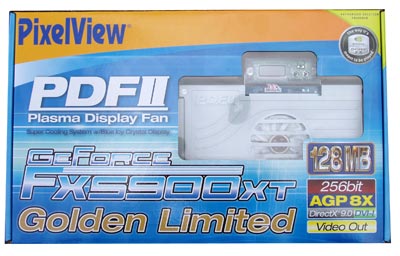

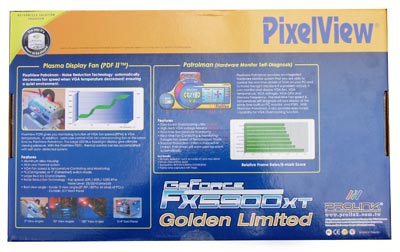

The Box:::... Prolink's box sticks faithfully to their corporate colour scheme and, like a Soho adult shop, the window gives a glimpse of the goods on offer once you get inside.

If that doesn't quite get you to take the bait, flipping the box over sets out all the vital statistics, talents and delights you're getting for your money. This foxy lady wants you bad, and if I don't stop with all the innuendo I'm heading for trouble! This is a family site, after all.

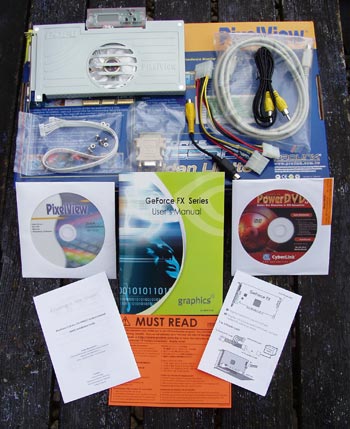

In terms of software the bundle is best described as adequate. The price is kept down by bundling no games, old or new, but there is a full copy of PowerDVD, which I consider an essential inclusion for any video card able to handle DVD playback. As you'd expect from a video card with TV out, there are several cables in the box: an S-Video cable, an S-Video-to-composite converter and a composite cable. There's also a power splitter so you can provide juice to the card without sacrificing a Molex connector. Finally, there's the literature, which is sufficiently detailed but unlikely to win any prizes.

Without a doubt the star attraction is the video card itself. Before you even plug it in and power it up, you can see there's something a little different going on. The cooling fan and heat sink are encased in a full-sized shroud, which also houses a multi-angle LCD display that plugs into the top edge of the card using a short data cable. I was a little surprised to see that Prolink had made the LCD housing from transparent plastic, considering most of the publicity shots show it with a metal housing.

A definite weakness is that the housing has no back to it. Why is this a weakness? Well, because you need quite a lot of pressure to operate the buttons on the front, which means steadying the back with a finger as you press.

The connectors are nothing out of the ordinary, except perhaps for the S-Video out. There's the usual pairing of VGA and DVI outputs, with a dongle supplied so you can use the DVI output for a second analogue display should you wish.

The fan features a clear hub that displays the coils, but its party trick only becomes apparent when it's powered up.

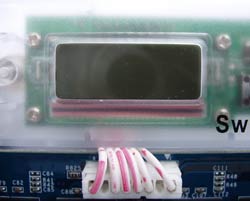

The LCD displays information on GPU temperature and fan speed. It's side-lit from the left and right by two very bright blue LEDs that tend to glare a little when you're reading the display, but which also add a fair amount of lighting to your case's innards. That may have been the motivation behind the switch to a transparent housing.

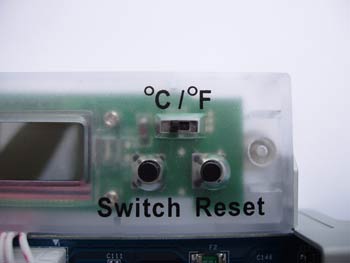

In addition to the LCD display there are two pushbuttons and a small selector switch. The switch should be fairly self-explanatory, its function being to display GPU temperatures in either Celsius or Fahrenheit.

The display isn't particularly clear or contrasty, though to be fair it's not as bad as it appears in the picture. It is however perfectly readable from a reasonable angle of view.

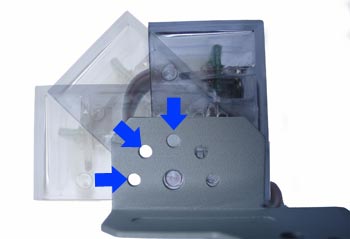

And the angle of view can be varied depending on your case orientation. There are three positions available with locking holes which locate with small lugs on the side of the housing.

It's hardly high-tech, and I think detents would have operated much more smoothly than actual holes, but it works, and you're unlikely to be changing the position every few minutes, so it's not a massive problem, I suppose.

And finally there's that fan I mentioned. Prolink call it a Plasma Display Fan; most of us will know it by its more common name, a blue LED fan.

Installation:::... With the full shroud removed things look a little more traditional. The RAM is as naked as the first lambs of Spring and the GPU has a fairly standard Aluminium sink/fan assembly on top. To their credit Prolink haven't skimped on the thermal grease although it's your run-of-the-mill silicone based gloop.

Memory comes in the shape of 128MB of fairly fast 2.5V Hynix 2.8ns chips. I've seen cards turning up with 2.5ns modules on them, but on the whole they don't seem to offer much of an overclocking advantage. Some retailers seem to be claiming 2.2ns memory, but they are mostly reading the speed from the wrong place in the part number. On the chip below, the speed isn't the "22" at the end of the second line down; it's the "28" in the "F-28" part of the line below that. So if anyone tells you they offer 2.2ns memory on their cards, check for yourself first.
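To see why the "28" matters, a common rule of thumb converts a DDR chip's access time into its rated clock. The physical clock is 1 divided by the access time, and DDR's two transfers per clock double that to the "effective" figure normally quoted. The Python sketch below assumes that rule; the function name is hypothetical. For 2.8ns chips it gives the 714MHz rating quoted in the overclocking results.

```python
def rated_effective_clock_mhz(access_time_ns):
    """Convert a DDR chip's access time (ns) to its rated effective clock (MHz).

    Assumed rule of thumb: maximum physical clock = 1 / access time, and
    DDR transfers data twice per clock, doubling the quoted figure.
    """
    physical_mhz = 1000.0 / access_time_ns  # 1 / (t ns) == 1000 / t MHz
    return 2 * physical_mhz


# 2.8ns is what these chips carry; 2.5ns and 2.2ns are the other speeds mentioned.
for t in (2.8, 2.5, 2.2):
    print(f"{t}ns -> {rated_effective_clock_mhz(t):.0f}MHz effective")
```

Misreading the part number as 2.2ns would imply roughly 909MHz effective. That would be a very different chip from the 2.8ns parts actually fitted.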

Okay, so you like the idea of temperature and fan speed readouts but you have no window in your case, or it's under your desk. Well, in this situation you can use the supplied 5.25" front bay housing and the extended data cable to relocate the LCD display to the front of your case. Shame they didn't design the housing so the display could swivel in there too, eh?

Installation:::... Prolink keep the driver installation procedure as painless as possible with a simple installation interface screen. Everything from the e-manual to updating your version of DirectX can be accessed from here.

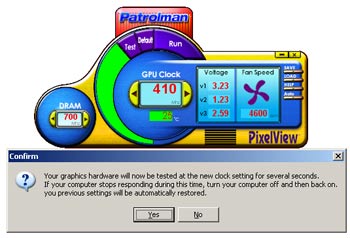

Also available from here is "Patrolman", Prolink's monitoring and overclocking utility. A handy feature is that you can set and save specific card settings for specific games or situations then recall them when you like.

As with CoolBits, around which it's almost certainly built, you need to test your new settings before you complete the procedure and apply them. Although I didn't have a lot of time to play with this app, it seems to work as expected, which isn't always a given for these in-house tweaking programs.

Test System Setup:::... Epox 8RDA3G

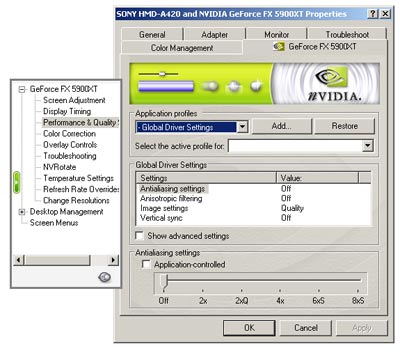

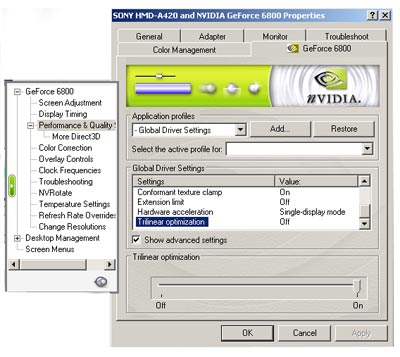

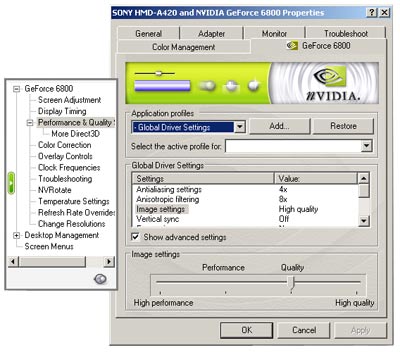

(nForce2 Ultra400) (Kindly supplied by Epox) Throughout testing the following driver setting was used: Antialiasing, Anisotropic Filtering and V-sync are all set to "OFF"

Trilinear Optimisations are left at the default "ON" position unless stated otherwise. (This setting can only be disabled using NV4x GPUs).

The image quality slider is set to the "Quality" position unless stated otherwise.

We've taken a slightly unusual route with this review. Because I have received a few emails on the subject, I decided to compare performance with the previous flagship in NVIDIA's lineup, the GeForce4 Ti4600. Let's get to it!

CodeCreatures:::... Built around the DirectX 8.1 API, CodeCreatures remains a genuinely taxing benchmark that makes use of both vertex and pixel shaders. This isn't a new benchmark, but it remains a burden to even modern hardware and is less likely to have been given any particular driver attention.

With average frame rates of 44.6, 34.9 and 27.7 FPS at 1024x768, 1280x1024 and 1600x1200 respectively, we still fall short of my magic standard of at least 30 FPS at every resolution. It does, however, shape up well against the Ti4600's 31.5, 25 and 19.9 FPS.

Gun Metal 1.20S:::... In their own words: "The Benchmark intentionally incorporates features and functions that are designed to really push the latest DirectX 9.x compatible 3D accelerators to the limit and therefore the speed of the benchmark should not be taken as representative of the speed of the game." Think Transformers on steroids.

And, much as we'd expect, the 5900XT gives the Ti4600 a pretty comprehensive kicking.

D3D RightMark 1.0.5.0 (Public Beta 4):::... I'd already configured some of the tests for a later review, and with the next generation of games on our doorstep (hopefully) there seemed little point in dumbing them down again. Because there are no default tests, I can supply the test configuration files to anyone who'd like to duplicate our tests exactly. Just email me if you need them.


Speed or no speed, the issue that counts here is the number of tests that the Ti4600 simply isn't equipped to carry out. PS2.0 shader performance has improved since I last took a look at this generation of GPU, but it's still not what I'd class as impressive.

Tomb Raider Angel of Darkness:::... Tomb Raider Angel of Darkness was, in my view, a lousy game but a great benchmark. As one of the first DirectX 9.0 games available, it gave us a chance to see how hardware was coping with the demands of the next generation of gaming. We use two different timedemos created in-house, so these results aren't comparable with results you may see on other sites. I created these timedemos, based on the Prague and Paris levels, for TrustedReviews, and you can read more about them in the short article I wrote HERE. Again, I can supply these timedemos if you want them. They're not master classes in gaming, but they weren't meant to be. There are lots of pauses to give us opportunities for screenshots, along with unusual jumps and aimless wandering, all performed so that certain elements of the background quality can be examined. They may seem random, but there was a purpose, and they are perfectly good for frame rate benchmarking.

The Ti4600's lead is flattering, as the benchmark almost certainly uses fallback routines to render the scenes it can't render natively. The worry here is that the 5900XT is clearly slower at running the features it was designed to run than the Ti4600 is at running the features it was designed to run.

AquaMark3:::... They tell us that "AquaMark3 allows PC users to easily measure and compare the performance of their current and next-generation PC systems. It provides a reliable benchmark which helps users to configure their systems for the best gaming performance. The AquaMark3 executes a complete state-of-the-art game engine and generates 3D scenes designed to make the same demands on hardware as a modern game."

I think AquaMark is as good a representation as any of the type of games we'll see reaching the market over the next twelve months or so. There will be exceptions, with particularly demanding titles like Doom III appearing, but I think these will remain the exception rather than the rule for a little while yet. The 5900XT's advantages are clearly evident from the results, and anyone with doubts about the benefits of upgrading from a GeForce4 or earlier should now be pretty convinced.

X² - The Threat:::... The X² - The Threat rolling demo also includes a benchmarking feature, so you can check out how well your system runs this visually stunning game.

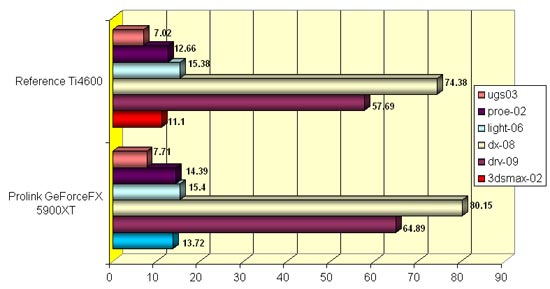

SPECViewperf 7.1:::... As they explain it so well, I'll duck out again and let them. "SPECviewperf parses command lines and data files, sets the rendering state, and converts data sets to a format that can be traversed using OpenGL rendering calls. It renders the data set for a pre-specified amount of time or number of frames with animation between frames. Finally, it outputs the results." Currently, there are six standard SPECopc application viewsets:

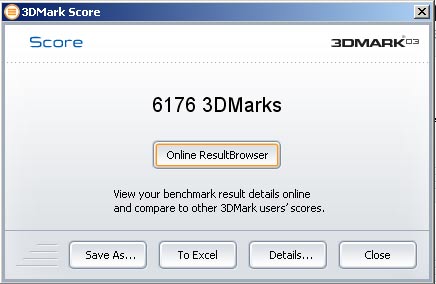

3DMark03 Build 340:::... I'm not sure if the 62.11 drivers have been certified yet, or if they ever will be, and I don't really care. We're comparing NVIDIA with NVIDIA, so if they cheat they can cheat themselves! I doubt you need an introduction, but just in case: "3DMark03 is the latest version of the highly favoured 3DMark series. By combining full DirectX® 9.0a support with completely new tests and graphics, 3DMark03 Pro continues the legacy of being industry standard benchmark."

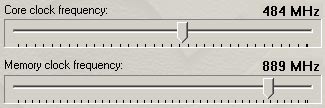

Overclocking:::... With a lot of the 5900XTs on the market seemingly hitting 500MHz+ on the core clock, a final stable setting of 484MHz was perhaps a little disappointing. Some of this disappointment was eased, though, by the memory, which made it to a rather exceptional 889MHz. That's pretty impressive for memory rated at no more than 714MHz. I guess the lack of RAM sinks is no great handicap after all, and no doubt the shroud keeps air circulating close to the PCB and thus over the memory chips too.

This rewarded us with a 1005-point gain over the stock 3DMark03 score of 5170.
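For anyone who prefers percentages, here's the arithmetic on those overclocking figures as a small Python sketch. It uses only the numbers quoted above. No stock core clock is stated here, so the core overclock isn't expressed as a percentage, and the variable names are my own.

```python
# Figures quoted in the overclocking results.
MEM_RATED_MHZ = 714   # effective rating of the 2.8ns memory
MEM_OC_MHZ = 889      # highest stable memory clock reached
SCORE_STOCK = 5170    # 3DMark03 score at stock clocks
SCORE_GAIN = 1005     # extra 3DMarks after overclocking


def pct_gain(new, old):
    """Percentage increase of new over old."""
    return (new / old - 1) * 100


mem_headroom = pct_gain(MEM_OC_MHZ, MEM_RATED_MHZ)
score_oc = SCORE_STOCK + SCORE_GAIN
score_uplift = pct_gain(score_oc, SCORE_STOCK)

print(f"Memory: {MEM_OC_MHZ}MHz is {mem_headroom:.1f}% above its {MEM_RATED_MHZ}MHz rating")
print(f"3DMark03: {SCORE_STOCK} -> {score_oc} ({score_uplift:.1f}% uplift)")
```

That works out to roughly a quarter more memory bandwidth than the chips are rated for. It yields just under a fifth more 3DMarks, which is about what you'd expect when the core clock couldn't follow the memory as far.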

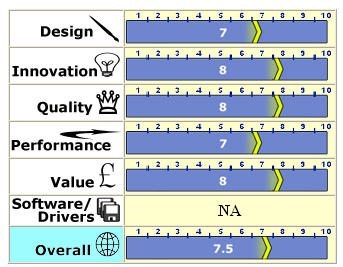

Free performance is always nice, no matter how far you can push it, and though core speeds were pegged back a little, you still get performance beyond that of the stock-clocked 5900XT, and for a much lower price.

Noise/Heat Levels:::... Because the fan speed is actively controlled based on the GPU temperature, it rarely got above 2900RPM in our test rig. This means very quiet operation on the whole, and though it's all relative, the card's operating volume gave no cause for concern. Though not the coolest-running card around, temperatures were considerably better than you'd have seen from the 5800 Ultra and in general were pretty good.

Some thoughts:::... I wanted to take this opportunity to speak up on an issue I've very much kept out of until now: cheating and optimisations. Although it's a subject many people, myself included, have grown weary of, I still think there are angles that haven't been covered in the great e-debate. One of the things that concerns me most is that all the protesting about game- or application-specific optimisations will actually serve to rob the end user of much-needed performance gains. Modern GPUs are, and will continue to become, increasingly programmable. As a result, every GPU will have a preferred way of doing things, much as AMD processors benefit from 3DNow! code while Intel's got a boost from MMX or SSE code. Using drivers or hardware to feed instructions to a GPU in the way it handles them best should NOT be something we all get upset about, and neither should the fact that a GPU doesn't perform quite as well when we shuffle those instructions around. This isn't cheating; this is working to your strengths. Does the fact that a dragster slows down when you run it on regular petrol mean it's not really fast at all, and that it's just cheating to give the illusion of speed?
Unless we're all happy to go back to the days of fixed-function pipelines and limited feature sets, we need to accept that tinkering with how a GPU gets its job done is likely to throw up some odd results, and that these don't necessarily mean anything untoward is going on. Speak to me in Arabic and I'll get a lot less efficient too!

Then there's the image quality debate. I'm sorry, but if you need to grab screenshots of a scene and use Photoshop's subtractive function to see any variations between one scene and another, then as far as I'm concerned the differences are too small to really matter. Poor image quality should be noticeable to the game player, not down to a couple of microscopic variations in the levels of filtering applied. No two artists paint a landscape the same way, and as modern graphics hardware gets more complex, there are more ways it can interpret the instructions being sent to it. This doesn't necessarily make one right and one wrong; it just makes them different. And as with artists painting landscapes, if you don't like the way one has interpreted the scene, then you buy from the other.

If NVIDIA, or ATi, or any of the other graphics survivors for that matter, can give me a 10 FPS boost by reordering a few shader instructions, I damned well expect them to do it! If, however, this degrades my enjoyment of the game by reducing the quality of the image on my screen, then I'm warning them to leave things alone or my money goes elsewhere. It's quite simple really. I genuinely don't care if renaming an executable drops the frame rate, so long as I can't see any difference on the screen. Those who keep kicking and screaming that this is cheating need to wake up and realise that it's they who will end up cheated when hardware developers get so paranoid that they stop looking for ways to make their architectures more efficient. We moaned for performance; now we're crying foul.
That said, Microsoft now, rightly or wrongly, sets many of the graphics standards with its omnipotent DirectX API, and all the features built into any given version of DirectX need to be handled efficiently by a company's hardware if it doesn't want to be dragged over the coals by review sites. That's where NV3x fell at the first hurdle. NV3x was inefficient, hot and not terribly fast at handling the features needed for the next generation of games. Image quality was poor, due primarily to badly implemented filtering, and drivers were clearly designed to wring out a few extra FPS at the expense of anything resembling a well-rendered frame. This is why there are so few NV3x reviews on 3DV. Despite all the talk of nearly free antialiasing and cinematic-quality graphics, the hardware simply wasn't powerful enough to deliver the frame rates we've all been conditioned to look for while also providing the image quality we were all now claiming was our first priority. There was still a lot of balancing of one against the other to be done, and while ATi got the balance about right, NVIDIA's brute-force frame rate mentality meant they got it wrong.

Now for something a little more positive. The Prolink 5900XT is a much better proposition than any of those earlier offerings based on the same GPU or its variants. Partly through driver improvements, image quality is now much better than before, though still far from perfect, while shader performance has seen some worthwhile increases. The basic weaknesses persist, but with prices now far more affordable, things are not as bleak as they once were. Rather late in its life, this GPU is at least a viable option for those who want value-for-money performance and are prepared to accept its drawbacks.
Conclusion:::... The 3DVelocity 'Dual Conclusions Concept' Explained: After discussing this concept with users as well as the companies and vendors we work with, 3DVelocity have decided that, where necessary, we shall aim to introduce our 'Dual Conclusions Concept' to sum up our thoughts and impressions of the hardware we review. As the needs of more experienced users and enthusiasts have increased, it has become more difficult to factor in all the aspects such a user would find important, while also being fair to products that may lack these high-end "bonus" capabilities but still represent a very good buy for the more traditional and more prevalent mainstream user. The two categories we've used are:

The Mainstream User ~ The mainstream user is likely to put price, stock performance, value for money, reliability and/or warranty terms ahead of the need for hardware that operates beyond its design specifications. The mainstream user may be a PC novice or an experienced user; either way, their needs are clearly very different from those of the enthusiast, in that they want to buy products that operate efficiently and reliably within their advertised parameters.

The Enthusiast ~ The enthusiast cares about all the things that the mainstream user cares about but is more likely to accept a weakness in one or more of them in exchange for some measure of performance or functionality beyond the product's design brief. For example, a high-priced motherboard may be tolerated in exchange for unusually high levels of overclocking ability, or an unusually large heat sink with a very poor fixing mechanism may be considered acceptable if it offers significantly superior cooling in return.

The Mainstream User ~ There's no doubt about it

The Enthusiast ~ If this is the card you're thinking of buying to keep you happy for the next year or two, then I'd suggest you look at the competition's GPU offerings. As a general games card the 5900XT is fine, but you must also remember that its relatively weak shader performance will handicap it in the next generation of shader-intensive games.

Test results from http://www.3dvelocity.com


Copyright 2002 pcresource.co.th. All rights reserved.

PC Resource Co., Ltd., 1112/9 Phra Khanong Shopping Center, Sukhumvit Road, Phra Khanong Subdistrict, Khlong Toei District, Bangkok 10110

Tel: (02) 712-0354-9 Fax (02) 382-0394

Branch contact addresses:

Head Office | Pantip branch | Zeer 1 branch | Zeer 2 branch | Future Park Bang Khae branch | Seacon Square | Tawanna | Sriracha | Pattaya